Before discussing AI, we need to answer the question ‘What is AI?’. Of the many definitions of AI, the most modern describes it as the science of designing systems that fall into four categories: systems that think like humans, systems that act like humans, systems that think rationally and systems that act rationally.

Automatic steering of ships has been a goal of seafarers for many years. Imperatives such as reduced manning and increasing fuel costs have led to innovative designs, from the classic PID (proportional–integral–derivative) controller to adaptive and robust control and, latterly, to intelligent control. The intention here is to present an overview of autopilot development and to illustrate how the so-called intelligent paradigms of fuzzy logic and artificial neural networks have been employed for intelligent ship steering, along with their limitations.

The automatic steering of ships has its origin several centuries ago, at a time when early fishermen would bind the tiller or rudder of their boats in a fixed position to hold an optimal course, releasing extra manpower to assist with launching and recovering the nets. Most likely, the criteria for selecting the optimal course were to minimise induced motions and to maintain a course that would help the deployment or recovery of the nets. This was the situation before the Industrial Revolution. It was not until after the revolution that methods for automatically steering ships were first contemplated, and the first ship autopilots came into use during the first part of the twentieth century.

The main difficulty in discussing intelligent approaches to autopilot design (and, more generally, to any AI design) is that of defining what is meant by ‘intelligent’ or ‘intelligent control’. It stems from the fact that there is no general agreement on definitions of human intelligence and intelligent behaviour.

One of the earliest criteria for ‘machine intelligence’ is due to Turing, who expressed it as the well-known Turing test, which is undertaken in the following manner: a person communicates with a computer through a terminal. When the person is unable to decide whether she/he is talking to a computer or to another person, the computer can safely be said to possess all the important characteristics of intelligence*.

The viewpoint adopted throughout is that intelligent control is the discipline that involves both artificial intelligence and control theory, the design of which should be based on an attempt to understand and duplicate some or part of the phenomena that ultimately produce a kind of behaviour that can be termed ‘intelligent’, i.e. generalisation, flexibility, adaptation, etc. The two paradigms generally accepted as matching or replicating these intelligent characteristics are fuzzy logic and artificial neural networks. Over the last twenty years or so there has been an explosion of interest in applying intelligent control to a wide range of application areas, including ship autopilot design. The motivation is the robustness of these methods, which can cope more effectively with the non-linear and uncertain characteristics of ship steering. Some of the approaches in this regard are:

Ø Fuzzy logic approaches - The use of fuzzy set theory as a method for replicating the non-linear behaviour of an experienced helmsman is perhaps the most appropriate application of this technique. Fuzzy rules of the type: “IF heading error is positive small AND heading error rate is positive big THEN rudder angle is positive medium”, typify the actions of an experienced helmsman. The schematic of a fuzzy logic controller is shown in Fig. 1 where the conventional controller block is replaced by a composite block comprising four components:

• The rule base that holds a set of “if–then” rules which are quantified through appropriate fuzzy sets to represent the helmsman's knowledge.

• The fuzzy inference engine that decides which rules are relevant to a particular situation or input, and applies actions indicated by these rules.

• Input fuzzification, which converts the input value into a form that can be used by the fuzzy inference engine to determine which rules are relevant.

• Output defuzzification, which combines the conclusions reached by the fuzzy inference engine to produce the rudder demand.

It is these four blocks, acting together, which encapsulate the knowledge and experience of the helmsman.
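The four components above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions, not the controller of any particular autopilot: the membership function shapes, the set boundaries, the rudder values and all names (`tri`, `ERROR_SETS`, `RATE_SETS`, `RUDDER`, `RULES`, `fuzzy_rudder`) are hypothetical, and the output sets are simplified to single values combined by a weighted average.

```python
def tri(x, a, b, c):
    """Triangular membership function with feet at a and c and peak at b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


# Hypothetical fuzzy sets: heading error in degrees, error rate in degrees/second.
ERROR_SETS = {
    "negative_small": (-10.0, -5.0, 0.0),
    "zero": (-5.0, 0.0, 5.0),
    "positive_small": (0.0, 5.0, 10.0),
}
RATE_SETS = {
    "negative_big": (-4.0, -2.0, 0.0),
    "zero": (-2.0, 0.0, 2.0),
    "positive_big": (0.0, 2.0, 4.0),
}
# Hypothetical output values (rudder angle in degrees), one per output label.
RUDDER = {"negative_medium": -15.0, "zero": 0.0, "positive_medium": 15.0}

# Rule base: (error label, rate label, rudder label).
# The first rule is the example quoted in the text.
RULES = [
    ("positive_small", "positive_big", "positive_medium"),
    ("zero", "zero", "zero"),
    ("negative_small", "negative_big", "negative_medium"),
]


def fuzzy_rudder(error, rate):
    """Return a rudder demand for a given heading error and error rate."""
    # Input fuzzification: membership grade of each input in each fuzzy set.
    mu_error = {name: tri(error, *params) for name, params in ERROR_SETS.items()}
    mu_rate = {name: tri(rate, *params) for name, params in RATE_SETS.items()}

    # Inference: AND is taken as min, giving the firing strength of each rule.
    numerator = 0.0
    denominator = 0.0
    for error_label, rate_label, rudder_label in RULES:
        strength = min(mu_error[error_label], mu_rate[rate_label])
        numerator += strength * RUDDER[rudder_label]
        denominator += strength

    # Output defuzzification: weighted average; zero rudder if no rule fires.
    return numerator / denominator if denominator > 0 else 0.0
```

With these assumed sets, `fuzzy_rudder(5.0, 2.0)` fires only the quoted rule at full strength and returns the ‘positive medium’ value, while an input halfway between two rules blends their outputs.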

Ø Neural network approaches - The human brain comprises approximately 10^11 neurons, each of which consists of a cell body, numerous fibres (dendrites) extending from the cell body, and a long fibre (axon) that carries signals to other neurons. The axon branches into strands and sub-strands, at the ends of which are synapses that form the weighted connections between neurons (each neuron makes a few thousand synapses with other neurons). Sending a signal from one neuron to another is a complex electro-chemical process. However, the principle is that if the weighted sum of the inputs arriving at a cell at any time instant is above a threshold level, the neuron ‘fires’ and sends a signal along its axon to the synapses with other neurons. As a result of the complex neuron interconnection structure described above, data patterns representing a particular event will have unique propagation paths through the brain. Artificial neural networks are built on this principle. The most widely used is the ‘feed forward’ layered network, or multi-layer perceptron (Fig. 2). Here the circles are the simulated neurons and the links represent the weighted connections; information flows in one direction only. It should be noted that there are several neuron simulation models and a wide range of neuron interconnection structures. For example, recurrent networks are configured with internal connections that feed back to other layers or to themselves.
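The firing principle and the one-directional flow of a feed-forward network can be illustrated with a short Python sketch. It is a simplified model under assumptions of my own: the function names (`threshold_neuron`, `sigmoid`, `feedforward`) and the layer representation are hypothetical, and the smooth sigmoid used in the layered network is one common stand-in for the hard threshold, not the only neuron model.

```python
import math


def threshold_neuron(inputs, weights, threshold):
    """Return 1 (the neuron 'fires') when the weighted input sum exceeds the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > threshold else 0


def sigmoid(z):
    """Smooth activation often used in place of a hard threshold."""
    return 1.0 / (1.0 + math.exp(-z))


def feedforward(inputs, layers):
    """Propagate inputs through a layered network in one direction only.

    Each layer is a list of (weights, bias) pairs, one pair per simulated neuron.
    """
    activations = list(inputs)
    for layer in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(weights, activations)) + bias)
            for weights, bias in layer
        ]
    return activations
```

For example, `threshold_neuron([1, 1], [0.6, 0.6], 1.0)` fires because the weighted sum 1.2 is above the threshold, whereas the same neuron with inputs `[1, 0]` does not.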

Clearly the neural network shown in Fig. 2 differs considerably in size, structure and complexity from the biological network of neurons described above. Despite this fundamental difference, such networks have been trained to approximate continuous non-linear functions and have been used successfully in a wide range of applications. Training involves supervised learning, reinforcement learning or unsupervised learning. Supervised learning involves providing the network with two data patterns, the input pattern and its corresponding output pattern. This enables a function called the teacher to be derived, which adjusts the interconnection weights in order to minimise the error between the actual and the desired output. Reinforcement learning, or learning with a critic, works by deriving an error when a target is not available for training. In this case the neural network obtains an error measure from an application-dependent performance parameter, the weight connections are adjusted and the network receives a reward/penalty signal. Training proceeds so as to maximise the likelihood of receiving further rewards and to minimise the chance of a penalty. With unsupervised learning there is no external error feedback signal to aid classification, as in pattern recognition applications. In this case the network is required to establish similarities and regularities in the input data sequence.
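As a rough illustration of supervised learning, the sketch below trains a single linear neuron so that its output matches a set of desired outputs. It assumes the simplest possible case (one input, one weight, one bias, an error-proportional update often called the delta rule); the function name `train_linear_neuron`, the learning rate and the sample data are hypothetical choices made for the example.

```python
def train_linear_neuron(samples, learning_rate=0.1, epochs=500):
    """Fit output = w * x + b to (input, desired output) pairs.

    After each sample the weight and bias are nudged in the direction
    that reduces the error between the desired and the actual output.
    """
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, target in samples:
            output = w * x + b
            error = target - output          # desired minus actual output
            w += learning_rate * error * x   # adjust the connection weight
            b += learning_rate * error       # adjust the bias
    return w, b


# Hypothetical training patterns generated from y = 2x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]
w, b = train_linear_neuron(samples)
```

On this noise-free data the weight and bias settle close to 2 and 1. A multi-layer network is trained in the same spirit, with the error propagated back through the layers to adjust every weight.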

Some approaches to neural network based autopilots use neural networks to mimic the actions of an existing autopilot. Such autopilots are trained by subjecting the neural network to a wide range of operational conditions, thereby enabling it to provide satisfactory control action for conditions for which it has not been trained explicitly. Other approaches use neural network mappings that generate controller parameters and/or state estimates from measured system performance in order to provide direct adaptive control. Alternatively, the neural network is configured to produce a mapping that relates actual performance to an accurate set of model parameters, i.e. indirect adaptive control.

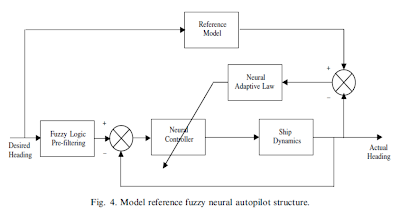

Examples of indirect and direct adaptive control approaches for intelligent ship autopilots have been given in which a model reference adaptive control architecture is proposed, utilising neural networks to provide approximate mappings of the control and to implement the adaptive law (Fig. 3). Another combination of neural networks and fuzzy logic has been proposed in which fuzzy decision making is used for the adaptive law and also to filter (shape) the rudder demand, which is necessary to prevent rudder actuator saturation (Fig. 4).

Ø Neurofuzzy approaches - The attractiveness of combining the transparent linguistic reasoning qualities of fuzzy logic with the learning abilities of neural networks to create intelligent self-learning controllers has, over the last decade, led to a wide range of applications. Such approaches have brought together the inherently robust and non-linear nature of fuzzy control with powerful learning methods through which the deficiencies of traditional fuzzy logic designs may be overcome. Many of the proposed fusions may be placed into one of two classes: networks trained by gradient descent or networks trained by reinforcement paradigms, although some methods combine these learning techniques. Whatever training method is chosen, the parameters within the fuzzy controller which are to be ‘tuned’ must be selected.

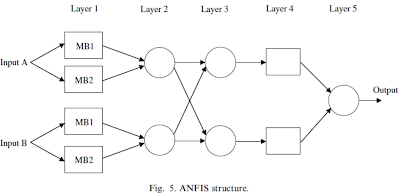

One of the most useful and widely used combinations of neural networks and fuzzy logic is the Adaptive Network Based Fuzzy Inference System (ANFIS) proposed by Jang, whereby the fuzzy consequents of first-order Sugeno-type rules (in which each rule's output is a linear function of the inputs) are tuned using neural networks. Fig. 5 shows the basic ANFIS architecture, where the fuzzy inference engine comprises a layered, feedforward network with some of the parameters represented by adjustable nodes (rectangles) and fixed nodes (circles). Data enters the network at layer 1, the nodes of which contain the membership functions. The second layer combines the possible input membership grades and computes the firing strength of each rule. Layer 3 is a normalising function producing normalised firing strengths. Layer 4 contains the fuzzy consequents, the outputs of which are aggregated as a weighted sum in Layer 5.
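The five layers can be traced in a short forward-pass sketch. It assumes two inputs `x` and `y`, Gaussian membership functions, the product as the AND operator, and first-order Sugeno consequents of the usual form f = p·x + q·y + r; the function names (`gaussian`, `anfis_forward`) and the parameter layout are hypothetical, and no training step is shown.

```python
import math


def gaussian(value, centre, width):
    """Gaussian membership function (an assumed shape for layer 1)."""
    return math.exp(-0.5 * ((value - centre) / width) ** 2)


def anfis_forward(x, y, premise, consequent):
    """Evaluate a two-input ANFIS-style network for one input pair.

    premise:    one ((centre_x, width_x), (centre_y, width_y)) pair per rule
    consequent: one (p, q, r) triple per rule, giving f = p*x + q*y + r
    """
    # Layer 1: membership grades of the inputs (adjustable premise parameters).
    grades = [(gaussian(x, *mf_x), gaussian(y, *mf_y)) for mf_x, mf_y in premise]

    # Layer 2: firing strength of each rule (product used as AND).
    firing = [grade_x * grade_y for grade_x, grade_y in grades]

    # Layer 3: normalised firing strengths.
    total = sum(firing)
    if total == 0.0:
        raise ValueError("no rule fires for this input")
    normalised = [strength / total for strength in firing]

    # Layer 4: rule consequents weighted by the normalised firing strengths
    # (adjustable consequent parameters p, q, r).
    weighted = [
        n * (p * x + q * y + r) for n, (p, q, r) in zip(normalised, consequent)
    ]

    # Layer 5: overall output as the sum of the weighted consequents.
    return sum(weighted)
```

In an autopilot setting `x` and `y` might be the heading error and its rate and the output the rudder demand, but that mapping is an assumption of this sketch. Training would adjust the `premise` and `consequent` parameters.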

As discussed above, an intelligent autopilot can be seen as an advantageous combination of different control design techniques and search algorithms that leads to the attainment of the control objectives. As a consequence, an intelligent controller is a highly non-linear, often time-variant controller, and the stability analysis of such control systems is therefore neither as straightforward nor as general as it is for linear time-invariant systems, where stability is a global and well-defined property of the system. The difficulty of studying analytically the behaviour of control systems based on combinations of neural networks and fuzzy logic has led to the practice of using extensive simulation trials to show the acceptable performance and the viability of a proposed intelligent control system.

In the simplest control structure, neural networks and fuzzy logic systems act as non-linear elements in the loop. Classical approaches, such as describing functions or the Popov criterion**, can therefore be applied to analyse the stability of such systems, while more sophisticated techniques such as Lyapunov stability theory** can be used for both the analysis and the synthesis of non-linear control systems in terms of the boundedness of the control signal and the tracking error. From a practical point of view, the approximation quality of the intelligent controller raises the questions of how many network nodes and what kind of learning algorithm have to be used (in order to prove that the error in representing the non-linear plant dynamics, as approximated by the neural network or fuzzy logic system, converges to zero).

A comparison of different neural network structures suggests that radial basis function neural networks with fixed input parameters are suitable for stability analysis, while multilayer perceptron neural networks trained with the back-propagation algorithm are not suitable for this purpose because of their highly non-linear nature.
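One way to see why fixed input parameters help is sketched below. The notation is assumed for illustration and is not taken from the source. With the centres $c_i$ and widths $\sigma_i$ held fixed, a radial basis function network is linear in its remaining adjustable weights $w_i$, whereas the output of a multilayer perceptron depends non-linearly on its hidden-layer weights. The second line recalls the basic Lyapunov conditions under which an equilibrium at $x = 0$ is stable.

```latex
\hat{f}(x) = \sum_{i=1}^{N} w_i \,\phi_i(x),
\qquad
\phi_i(x) = \exp\!\left(-\frac{\lVert x - c_i \rVert^{2}}{2\sigma_i^{2}}\right)

V(0) = 0, \qquad V(x) > 0 \ \text{for } x \neq 0, \qquad \dot{V}(x) \le 0
```

Because $\hat{f}$ is linear in $w_i$, the error in the weights enters the closed-loop equations linearly, which is what makes a simple function $V$ easier to construct in this case.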

From this overview of approaches to the design of intelligent autopilots, it is seen that such advanced control systems are often the result of an advantageous combination of different control design techniques that tries to act and make decisions like an experienced helmsman. However, stability issues associated with using intelligent paradigms for ship autopilots have been raised, because in unpredictable, critical and emergency situations such a system cannot think like a human. Further work on the stability analysis of intelligent control systems is therefore vital if they are to become accepted, particularly in certificated and safety-critical applications.

It is nevertheless clear that, in comparison with PID autopilots (i.e. with just the feedback network), intelligent autopilots offer considerable improvements in performance and as such represent a viable alternative to PID designs. The analysis above also shows how well-known methods of non-linear analysis may be used to design a stable intelligent autopilot for ships. The functional equivalence between certain neural networks and fuzzy logic systems can be used to extend and generalise analytical results from one field to the other. However, until the stability issue of intelligent control is properly addressed and generic solutions are formulated, these kinds of advanced control systems cannot be fully developed and will not gain acceptance (especially in certified and safety-critical applications).

Future developments in intelligent autopilots will undoubtedly follow the evolution of the intelligent control community, where the incorporation of ideas and methodologies based on learning control and intelligent decision making is gaining increasing popularity.

*This test clearly emphasises the external behaviours required for a computer (or machine) to be safely defined as an intelligent machine. These external behaviours must be indistinguishable from those of a human being and, most importantly, this has to be so from the point of view of a human (that is, the observer).

**The Popov criterion is a frequency-domain test that gives a sufficient condition for the stability of a feedback loop made up of a linear time-invariant system and a static non-linearity confined to a sector. Lyapunov theory is concerned with the stability analysis of an equilibrium by means of an energy-like function that does not increase along the solutions of the system; it can also give an estimate of how quickly the solutions converge to a required value.

Both methods are employed for non-linear systems; Lyapunov theory also covers time-variant systems.

References:

[1] Bennet A. A history of control engineering 1800–1930. Peter Peregrinus Ltd; 1997.

[2] Fossen TI. A survey of non-linear ship control: From theory to practice. Proc. 5th IFAC Conference on Manoeuvring and Control of Marine Craft (MCMC2000), 2000. p. 1–16.

[3] Allensworth T. A short history of Sperry Marine, 1999. http://www.sperry-marine.com/pages/history.html.

[4] Wikipedia: for defining PID controller, Popov criteria and Lyapunov theory.

[5] Introduction to AI (.ppt) by Prof. Dechter, University of California: for the definition of AI and the Turing test.

[6] Chen FC, Khalil HK. Adaptive control of non-linear systems using neural networks.

By,

Syed Ashruf,

AE09B025.
